TCP throughput test according to RFC 6349

This task follows IETF RFC 6349, a framework for TCP throughput testing. The RFC describes a practical methodology for measuring end-to-end TCP throughput in an IP network. Its objective is to provide a throughput indication that better reflects the user experience.

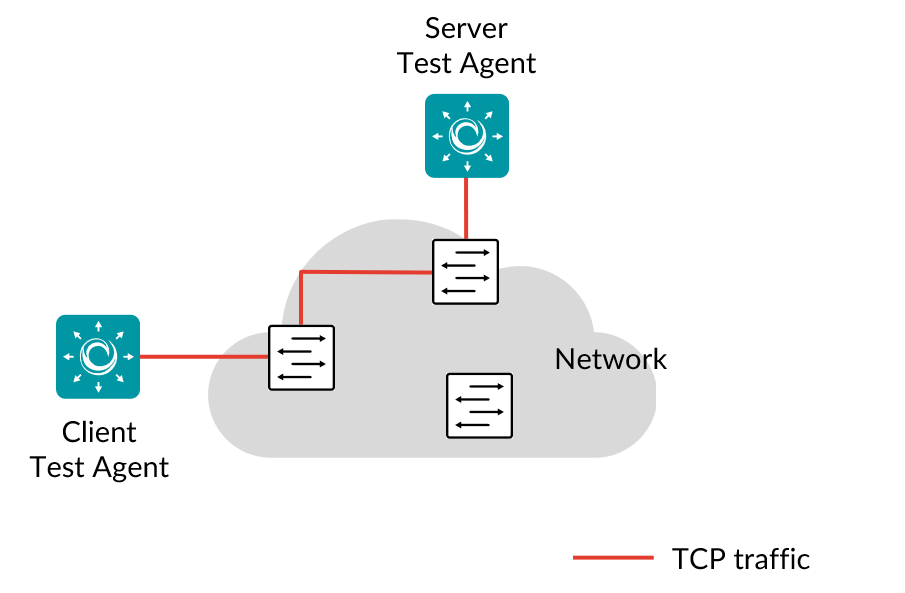

The TCP throughput test is conducted between two Test Agents, one acting as server and the other acting as client, as illustrated below.

The test is executed in the following steps:

Step 1: Path MTU (Maximum Transmission Unit) is measured in the selected traffic direction or directions. This is accomplished by running a TCP session that measures the path MTU. If the measured MTU is lower than what is currently configured on that interface, the test will end with an error. You then need to adjust the interface’s MTU setting. Note that the measurement can never return an MTU size larger than what is currently configured, even if larger MTUs are otherwise supported in the network path.

Step 2: The baseline RTT (round-trip time) is measured. This is accomplished by sending ICMP echo requests (pings) from the client to the server. Based on the measured RTT and the supplied bottleneck bandwidth (BB) values, we can calculate the bandwidth-delay product BDP = BB × RTT for each traffic direction.

The BDP is the minimum sending and receiving socket buffer size required to reach the bottleneck bandwidth (minus overheads) with the given RTT value. The calculated buffer size is then multiplied by 20 (40 for bidirectional tests); this is needed as a safety margin, and also because the Linux kernel’s buffer usage is not very efficient. The larger of the buffer sizes thus obtained is used for both directions.

The bottleneck bandwidth is the Layer 2 capacity of the network path tested. This rate counts everything up to and including the Ethernet header and CRC, but not the inter-frame gap, preamble, or start of frame delimiter.
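The buffer sizing in step 2 can be sketched as follows. This is a minimal illustration, not the Test Agent's actual code: the function names and the unit conversions (Mbit/s and milliseconds to bytes) are assumptions, while the multipliers 20 and 40 and the "larger of the two" rule come from the description above.

```python
def bdp_bytes(bottleneck_mbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product BDP = BB x RTT, converted to bytes."""
    bits = bottleneck_mbps * 1e6 * (rtt_ms / 1e3)
    return bits / 8


def socket_buffer_bytes(rtt_ms: float, bb_down_mbps=None, bb_up_mbps=None) -> float:
    """Buffer size used for both directions (hypothetical helper).

    Pass only the bandwidth(s) of the selected traffic direction(s).
    """
    directions = [bb for bb in (bb_down_mbps, bb_up_mbps) if bb is not None]
    if not directions:
        raise ValueError("at least one traffic direction is required")
    # Safety margin: 20 for one direction, 40 for bidirectional tests.
    multiplier = 40 if len(directions) == 2 else 20
    # The larger of the per-direction buffer sizes is used for both.
    return max(multiplier * bdp_bytes(bb, rtt_ms) for bb in directions)


if __name__ == "__main__":
    print(bdp_bytes(100, 20))
    print(socket_buffer_bytes(20, bb_down_mbps=100))
    print(socket_buffer_bytes(20, bb_down_mbps=100, bb_up_mbps=50))
```

For a 100 Mbit/s bottleneck and a 20 ms RTT, the BDP is 250,000 bytes, so a downstream-only test would use roughly 5,000,000 bytes under these assumptions.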

Step 3: A number of TCP sessions are started with appropriate socket buffer settings as determined in step 2. You can request a specific number of TCP sessions; otherwise the number is calculated automatically based on the BDP. The maximum is 20 sessions.

The buffer size calculated in step 2 is the combined buffer size for all flows, so if multiple flows are used, each flow will use a part of that buffer. You can also request a specific buffer size setting per flow. If the bottleneck bandwidth cannot theoretically be reached with the given number of flows and buffer size, then the test will end with an error.

During this phase the ping measurement is still running, and RTT statistics are collected.
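The feasibility check mentioned in step 3 (whether the bottleneck bandwidth can theoretically be reached with a given number of flows and buffer size) is not specified in detail here. One plausible reading, sketched below, uses the standard window-limited bound: a flow cannot have more than one buffer's worth of data in flight per round trip. The function names are hypothetical, and real kernels use part of the buffer for bookkeeping, so the achievable rate is lower than this bound.

```python
def max_window_limited_mbps(flows: int, buffer_kib_per_flow: float, rtt_ms: float) -> float:
    """Upper bound on combined throughput: flows x buffer / RTT, in Mbit/s."""
    bytes_in_flight = flows * buffer_kib_per_flow * 1024
    return bytes_in_flight * 8 / (rtt_ms / 1e3) / 1e6


def can_reach_bottleneck(bb_mbps: float, flows: int,
                         buffer_kib_per_flow: float, rtt_ms: float) -> bool:
    """True if the window-limited bound is at least the bottleneck bandwidth."""
    return max_window_limited_mbps(flows, buffer_kib_per_flow, rtt_ms) >= bb_mbps


if __name__ == "__main__":
    # 4 flows of 64 KiB each at 20 ms RTT against a 100 Mbit/s bottleneck.
    print(max_window_limited_mbps(4, 64, 20))
    print(can_reach_bottleneck(100, 4, 64, 20))
    print(can_reach_bottleneck(100, 2, 64, 20))
```

With these numbers, four 64 KiB flows give a bound of about 104.9 Mbit/s, which is enough for a 100 Mbit/s bottleneck, whereas two such flows are not.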

Step 4: The measurement is stopped, and statistics are collected. The buffer delay percentage shows how much the RTT increased while the TCP connection was loaded: (RTT under load − baseline RTT) / baseline RTT × 100. If there is a substantial increase, the socket buffer size calculated in step 2 might not be sufficient. In that case it is advisable to rerun the test with a manually adjusted buffer size value.
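As a small worked example, the buffer delay percentage can be computed directly from the two RTT values. The function name is an assumption made for illustration; the formula is the one given above.

```python
def buffer_delay_percent(rtt_under_load_ms: float, baseline_rtt_ms: float) -> float:
    """Relative RTT increase under TCP load, in percent."""
    if baseline_rtt_ms <= 0:
        raise ValueError("baseline RTT must be positive")
    return (rtt_under_load_ms - baseline_rtt_ms) / baseline_rtt_ms * 100


if __name__ == "__main__":
    # Baseline RTT of 20 ms rising to 30 ms under load.
    print(buffer_delay_percent(30, 20))
```

A baseline RTT of 20 ms that rises to 30 ms under load corresponds to a buffer delay of 50%.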

The TCP transfer time ratio shows the ratio of actual to ideal download time for a hypothetical file. This should be the same as the ratio between the theoretically achievable throughput and the measured combined TCP throughput. The theoretically possible throughput (TCP goodput) is calculated from the given BB by subtracting the overheads.
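The following sketch shows one way the ideal TCP goodput and the transfer time ratio could be derived. Exactly which overheads the test subtracts is not stated here, so the defaults below are assumptions: a 1500-byte MTU, a 20-byte IPv4 header, a 20-byte TCP header without options, and 18 bytes of Ethernet header plus CRC with no VLAN tag. The function names are hypothetical.

```python
def ideal_tcp_goodput_mbps(bb_mbps: float, mtu: int = 1500,
                           ip_header: int = 20, tcp_header: int = 20,
                           l2_overhead: int = 18) -> float:
    """Scale the Ethernet-level bottleneck bandwidth by payload/frame size."""
    payload = mtu - ip_header - tcp_header   # TCP payload bytes per packet
    frame = mtu + l2_overhead                # Ethernet header + IP packet + CRC
    return bb_mbps * payload / frame


def transfer_time_ratio(ideal_mbps: float, measured_mbps: float) -> float:
    """Actual / ideal transfer time, which equals ideal / measured throughput."""
    if measured_mbps <= 0:
        raise ValueError("measured throughput must be positive")
    return ideal_mbps / measured_mbps


if __name__ == "__main__":
    ideal = ideal_tcp_goodput_mbps(100)
    print(round(ideal, 2))
    print(round(transfer_time_ratio(ideal, 80), 3))
```

Under these assumptions a 100 Mbit/s bottleneck yields an ideal goodput of about 96.18 Mbit/s, and a measured throughput of 80 Mbit/s gives a transfer time ratio of about 1.2.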

The TCP efficiency is the ratio of total received bytes to total sent bytes. A value less than 100% here indicates TCP retransmissions.

The test fails if the measured rates are lower than the rate thresholds, or the TCP efficiency is lower than the threshold set, or the transfer time ratio is higher than the threshold set.
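The pass/fail rule can be expressed compactly for a single traffic direction. This is an illustrative sketch only: the `Thresholds` class and the function names are hypothetical, and values exactly equal to a threshold are treated as passing, since the text says the test fails only when a value is lower (or, for the transfer time ratio, higher) than its threshold.

```python
from dataclasses import dataclass


@dataclass
class Thresholds:
    min_rate_mbps: float
    min_efficiency_percent: float
    max_transfer_time_ratio: float


def tcp_efficiency_percent(received_bytes: int, sent_bytes: int) -> float:
    """Ratio of total received bytes to total sent bytes, in percent."""
    if sent_bytes <= 0:
        raise ValueError("sent_bytes must be positive")
    return received_bytes / sent_bytes * 100


def passes(rate_mbps: float, efficiency_percent: float,
           ratio: float, t: Thresholds) -> bool:
    """Apply the three pass criteria for one traffic direction."""
    return (rate_mbps >= t.min_rate_mbps
            and efficiency_percent >= t.min_efficiency_percent
            and ratio <= t.max_transfer_time_ratio)


if __name__ == "__main__":
    limits = Thresholds(min_rate_mbps=80, min_efficiency_percent=98,
                        max_transfer_time_ratio=1.5)
    eff = tcp_efficiency_percent(990, 1000)
    print(passes(85, eff, 1.2, limits))
    print(passes(75, eff, 1.2, limits))
```

For a bidirectional test, the same check would presumably be applied to each direction with its own thresholds.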

Note that socket buffer sizes cannot be arbitrarily large. First, there is a system limit on the buffer size that can be allocated to a single TCP socket. Second, there is a system limit on the total buffer size that all TCP sessions can use. Finally, there is the limitation of the physical memory in the Test Agents. The test prints the system limits to the logs. If any limit is violated, the test ends with an error.
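The three limits can be pictured as a simple pre-flight check. The sketch below is an assumption about how such a check might look, not the Test Agent's implementation; the function and parameter names are hypothetical. On Linux, limits of this kind are typically exposed through sysctls such as `net.core.rmem_max` and `net.core.wmem_max` (per socket) and `net.ipv4.tcp_mem` (total TCP memory, in pages), but which values the Test Agent actually reads is not stated here.

```python
def check_buffer_limits(per_socket_bytes: int, sessions: int,
                        per_socket_limit: int, total_limit: int,
                        physical_memory: int) -> list:
    """Return a list of violated limits; an empty list means all checks pass."""
    problems = []
    total = per_socket_bytes * sessions
    if per_socket_bytes > per_socket_limit:
        problems.append("per-socket buffer limit exceeded")
    if total > total_limit:
        problems.append("total TCP buffer limit exceeded")
    if total > physical_memory:
        problems.append("not enough physical memory")
    return problems


if __name__ == "__main__":
    # Hypothetical values: 4 sessions of 5 MB each against a 16 MB total limit.
    print(check_buffer_limits(5_000_000, 4, 6_000_000, 16_000_000, 8_000_000_000))
```

In this example the per-socket size is within its limit, but four sessions together exceed the total limit, so the check reports one violation.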

This task works only with IPv4.

Note

This test requires exclusive access to the Test Agent, meaning that no other tests or monitors can be assigned to the Test Agent.

Prerequisites

To run a TCP throughput test you need to have two Test Agents installed. If you have not yet installed them, consult the installation guides.

Then create a new TCP test and fill in the mandatory parameters below:

Parameters

General

Server: The Test Agent interface that will act as server.

Client: The Test Agent interface that will act as client.

Server port: The server TCP port. Range: 1 … 65535. Default: 5000.

Traffic direction: The direction(s) of TCP traffic. One of: Upstream (from client to server), Downstream (from server to client), or Bidirectional (in both directions at the same time). Default: Downstream.

Bottleneck bandwidth from server to client (Mbit/s), Bottleneck bandwidth from client to server (Mbit/s): Both of these bandwidths are specified on the Ethernet level and include the CRC but not the Inter Frame Gap, Preamble, or Start of Frame Delimiter. On a 100 Mbit/s interface, the maximum throughput is around 98.7 Mbit/s. Range: 0.1 … 10,000 Mbit/s. No default.

DSCP: The Differentiated Services Code Point or IP Precedence to be used in IP packet headers. The available choices are listed in the drop-down box. Default: “0 / IPP 0”.

VLAN priority (PCP): The Priority Code Point to be used in the VLAN header. Range: 0 … 7. Default: 0.

Test duration (seconds): The duration of this test step in seconds. Min: 30 s. Max: 600 s. Default: 60 s.

Wait for ready: Time to wait before starting this test step. The purpose of inserting a wait is to allow all Test Agents time to come online and acquire good time sync. Min: 1 min. Max: 24 hours. Default: “Don’t wait”, i.e. zero wait time.
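The figure of roughly 98.7 Mbit/s quoted for the bottleneck bandwidth parameter can be reproduced with a short calculation. The sketch assumes full-size, untagged 1518-byte Ethernet frames (header and CRC included), each preceded on the wire by 8 bytes of preamble and start of frame delimiter and followed by a 12-byte inter-frame gap. The frame size is an assumption; the text does not state which frame size the figure is based on.

```python
PREAMBLE_SFD_BYTES = 8      # preamble (7) + start of frame delimiter (1)
INTER_FRAME_GAP_BYTES = 12  # minimum gap between Ethernet frames


def ethernet_level_rate_mbps(line_rate_mbps: float, frame_bytes: int = 1518) -> float:
    """Share of the line rate left for frames (header through CRC)."""
    on_wire = frame_bytes + PREAMBLE_SFD_BYTES + INTER_FRAME_GAP_BYTES
    return line_rate_mbps * frame_bytes / on_wire


if __name__ == "__main__":
    print(round(ethernet_level_rate_mbps(100), 1))
    print(round(ethernet_level_rate_mbps(100, frame_bytes=64), 1))
```

With smaller frames the fixed per-frame overhead weighs more heavily: for 64-byte frames the same calculation gives about 76.2 Mbit/s on a 100 Mbit/s interface.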

Thresholds

Rate (down) for pass criteria (Mbit/s): The server-to-client TCP rate threshold for passing the test. Range: 0.1 … 10,000 Mbit/s. No default.

Rate (up) for pass criteria (Mbit/s): The client-to-server TCP rate threshold for passing the test. Range: 0.1 … 10,000 Mbit/s. No default.

Transfer time ratio (down): Threshold for server-to-client transfer time ratio, i.e. the maximum allowed ratio of actual to ideal TCP transfer time. Range: 1 … 1000. No default.

Transfer time ratio (up): Threshold for client-to-server transfer time ratio, i.e. the maximum allowed ratio of actual to ideal TCP transfer time. Range: 1 … 1000. No default.

TCP efficiency down (%): Threshold for server-to-client TCP efficiency, i.e. the minimum required ratio of total received bytes to total sent bytes. Range: 0.1 … 100 %. No default.

TCP efficiency up (%): Threshold for client-to-server TCP efficiency, i.e. the minimum required ratio of total received bytes to total sent bytes. Range: 0.1 … 100 %. No default.

Advanced settings

Number of streams, optional: The number of TCP sessions to use. If not specified, the number will be calculated automatically. Range: 1 … 20. No default.

Buffer size (KiB), optional: Socket buffer size to use for both sending and receiving. If not specified, the size will be set automatically. Range: 4 … 9765 KiB. No default.

Max send rate down (Mbit/s), optional: Server-to-client maximum TCP send rate for the test. Range: 0.1 … 10,000 Mbit/s. No default.

Max send rate up (Mbit/s), optional: Client-to-server maximum TCP send rate for the test. Range: 0.1 … 10,000 Mbit/s. No default.

Result metrics

MTU (bytes): The actual measured path MTU in the selected traffic direction(s), i.e. the maximum frame size supported by all included network equipment on the specified path.

Baseline RTT (ms): Baseline round-trip time.

RTT under load (ms): Round-trip time under TCP load.

Buffer delay (%): Buffer delay percentage, showing how much the round-trip time increased during the application of TCP load.

TCP throughput (Mbit/s): TCP throughput achieved.

TCP transfer time ratio: The ratio of actual to ideal TCP transfer time or, equivalently, the ratio of the theoretically possible TCP throughput to the measured TCP throughput.

TCP efficiency (%): The ratio of total received bytes to total sent bytes. If this ratio is less than 100%, it indicates that TCP retransmissions have occurred.

Pass/fail outcome of test.

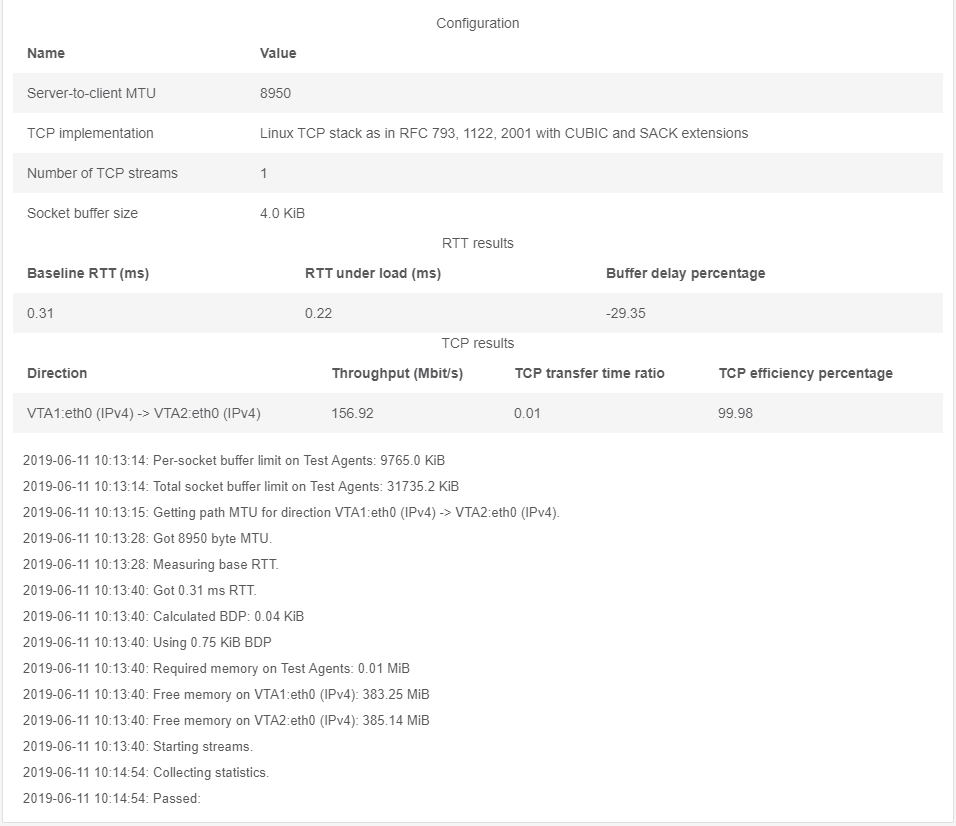

The picture below shows an example of results obtained from an RFC 6349 TCP throughput test.